Overview

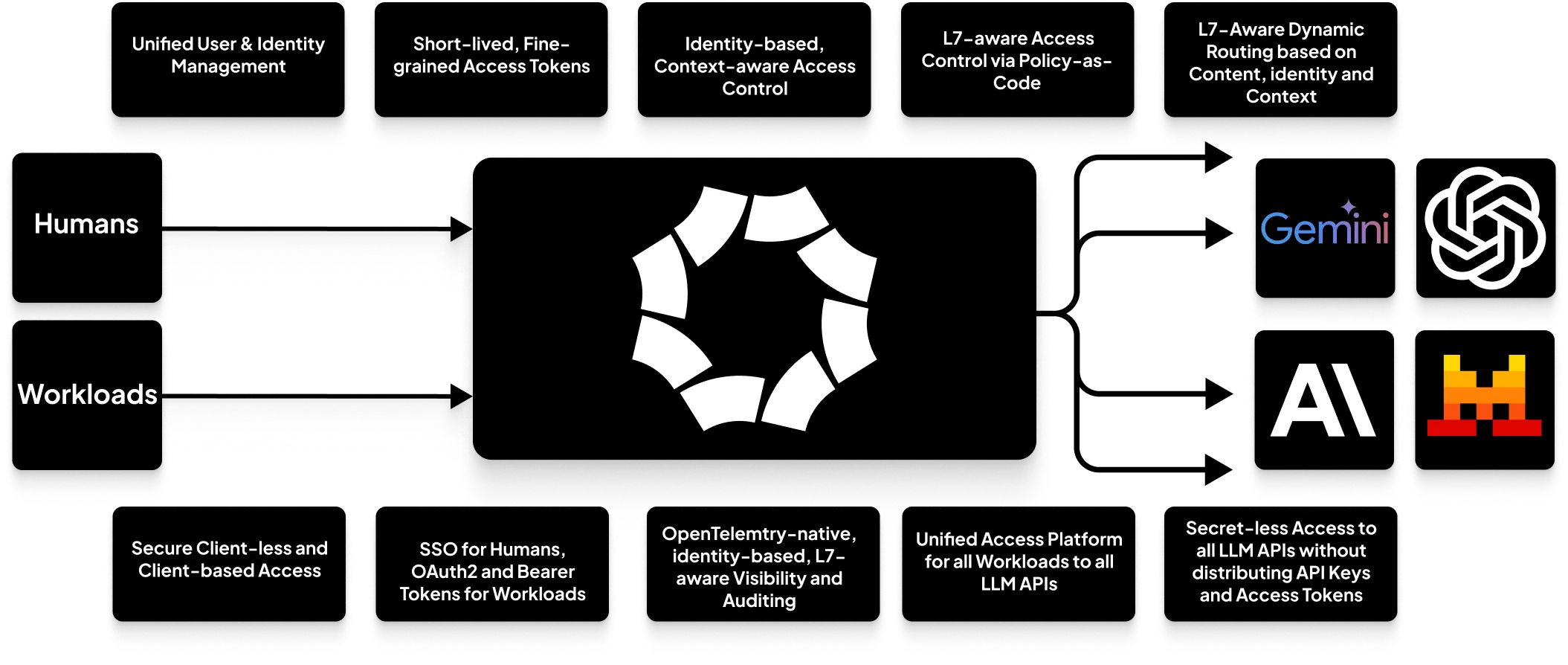

Octelium provides a complete, self-hosted, open source infrastructure for building AI gateways to both SaaS and self-hosted LLMs. When used as an AI gateway, Octelium provides the following:

- A unified scalable infrastructure for all your applications written in any programming language, to securely access any AI LLM API in a secretless way without having to manage and distribute API keys and access tokens (read more about secretless access here).

- Self-hosting of your models via managed containers (read more here). You can see a dedicated example for Ollama here.

- Centralized identity-based, application-layer (L7) aware ABAC access control on a per-request basis (read more about access control here).

- Unified, scalable identity management for both HUMAN as well as WORKLOAD Users (read more here).

- Request/output sanitization and manipulation with Lua scripts and Envoy ExtProc compatible interfaces (read more here) to build your ad-hoc rate limiting, semantic caching, guardrails and DLP logic.

- OpenTelemetry-native, identity-based, L7 aware visibility and auditing that captures requests and responses including serialized JSON body content.

- GitOps-friendly declarative, programmable management (read more here).

A Simple Gateway

This simple example exposes a Gemini API Service publicly (read more about the public clientless BeyondCorp mode here). First, create a Secret for the Gemini API key as follows:

```bash
octeliumctl create secret gemini-api-key
```

Now we create the Service for the Gemini API as follows:

```yaml
kind: Service
metadata:
  name: gemini
spec:
  mode: HTTP
  isPublic: true
  config:
    upstream:
      url: https://generativelanguage.googleapis.com
    http:
      path:
        addPrefix: /v1beta/openai
      auth:
        bearer:
          fromSecret: gemini-api-key
```

You can now apply the creation of the Service as follows (read more here):

```bash
octeliumctl apply /PATH/TO/SERVICE.YAML
```

Client Side

Octelium enables authorized Users (read more about access control here) to access the Service both via the client-based mode and publicly via the clientless BeyondCorp mode (read more here). In this guide, we use the clientless mode and access the Service with standard OAuth2 client credentials, so that workloads written in any programming language can reach the Service without special SDKs or external clients. All you need is to create an OAUTH2 Credential as illustrated here. Here is an example written in TypeScript:

```typescript
import OpenAI from "openai";

import { OAuth2Client } from "@badgateway/oauth2-client";

async function main() {
  // Exchange the OAUTH2 Credential for an access token
  const oauth2Client = new OAuth2Client({
    server: "https://<DOMAIN>/",
    clientId: "spxg-cdyx",
    clientSecret: "AQpAzNmdEcPIfWYR2l2zLjMJm....",
    tokenEndpoint: "/oauth2/token",
    authenticationMethod: "client_secret_post",
  });

  const oauth2Creds = await oauth2Client.clientCredentials();

  // Use the access token as the API key against the public Service address
  const client = new OpenAI({
    apiKey: oauth2Creds.accessToken,
    baseURL: "https://gemini.<DOMAIN>",
  });

  const chatCompletion = await client.chat.completions.create({
    messages: [
      { role: "user", content: "How do I write a Golang HTTP reverse proxy?" },
    ],
    model: "gemini-2.0-flash",
  });

  console.log("Result", chatCompletion);
}

main().catch(console.error);
```
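Because the flow is standard OAuth2, the token can also be obtained without an OAuth2 library. The following is a minimal sketch, assuming the `/oauth2/token` endpoint and the `client_secret_post` method from the example above, and assuming the endpoint answers with the standard OAuth2 JSON body containing `access_token`. The helper names `buildTokenRequest` and `fetchAccessToken` are hypothetical and not part of Octelium.

```typescript
// Hypothetical helpers: a dependency-free sketch of the client credentials flow.
interface TokenRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Builds the form-encoded request for the "client_secret_post" method,
// where the client ID and secret travel in the request body.
function buildTokenRequest(
  server: string,
  clientId: string,
  clientSecret: string,
): TokenRequest {
  const body = new URLSearchParams({
    grant_type: "client_credentials",
    client_id: clientId,
    client_secret: clientSecret,
  });
  return {
    url: new URL("/oauth2/token", server).toString(),
    method: "POST",
    headers: { "Content-Type": "application/x-www-form-urlencoded" },
    body: body.toString(),
  };
}

// Sends the request and returns the access token. Assumes the endpoint
// answers with the standard OAuth2 JSON body containing "access_token".
async function fetchAccessToken(req: TokenRequest): Promise<string> {
  const resp = await fetch(req.url, {
    method: req.method,
    headers: req.headers,
    body: req.body,
  });
  if (!resp.ok) {
    throw new Error(`token request failed with status ${resp.status}`);
  }
  const data = (await resp.json()) as { access_token: string };
  return data.access_token;
}
```

The returned token would then be passed as `apiKey` to the OpenAI client, exactly as `oauth2Creds.accessToken` is in the example above. Splitting request construction from sending keeps the first half easy to unit-test without network access.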

Dynamic Routing

You can also route to a specific LLM provider based on the content of the request body (read more about dynamic configuration here). Here is an example:

```yaml
kind: Service
metadata:
  name: total-ai
spec:
  mode: HTTP
  isPublic: true
  config:
    upstream:
      url: https://generativelanguage.googleapis.com
    http:
      enableRequestBuffering: true
      body:
        mode: JSON
      path:
        addPrefix: /v1beta/openai
      auth:
        bearer:
          fromSecret: gemini-api-key
  dynamicConfig:
    configs:
      - name: openai
        upstream:
          url: https://api.openai.com
        http:
          path:
            addPrefix: /v1
          auth:
            bearer:
              fromSecret: openai-api-key
      - name: deepseek
        upstream:
          url: https://api.deepseek.com
        http:
          path:
            addPrefix: /v1
          auth:
            bearer:
              fromSecret: deepseek-api-key
    rules:
      - condition:
          match: ctx.request.http.bodyMap.model == "gpt-4o-mini"
        configName: openai
      - condition:
          match: ctx.request.http.bodyMap.model == "deepseek-chat"
        configName: deepseek
      # Fallback to the default config
      - condition:
          matchAny: true
        configName: default
```

For more complex and dynamic routing rules (e.g. message-based routing), you can use the full power of Open Policy Agent (OPA) (read more here).
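To make the rule semantics in the `total-ai` Service concrete, here is a simplified TypeScript model of how a request body maps to a config name. It is a sketch for reasoning about the rules, not Octelium's implementation, and it assumes the rules are evaluated in order with the first match winning, which is what the trailing `matchAny` fallback suggests. The `Rule` type and `selectConfig` function are hypothetical names.

```typescript
// A simplified model of ordered rule evaluation; not Octelium's implementation.
type RoutedBody = { model?: string };

interface Rule {
  // Stand-in for the CEL condition in the Service definition.
  matches: (body: RoutedBody) => boolean;
  configName: string;
}

// Mirrors the three rules of the total-ai Service.
const rules: Rule[] = [
  { matches: (b) => b.model === "gpt-4o-mini", configName: "openai" },
  { matches: (b) => b.model === "deepseek-chat", configName: "deepseek" },
  { matches: () => true, configName: "default" }, // matchAny fallback
];

// Returns the config name of the first rule whose condition holds.
function selectConfig(body: RoutedBody, ruleList: Rule[] = rules): string {
  for (const rule of ruleList) {
    if (rule.matches(body)) {
      return rule.configName;
    }
  }
  // Assumption: with no matching rule, the Service's main config applies.
  return "default";
}
```

Under this model, a request with `model: "gpt-4o-mini"` goes to OpenAI, `model: "deepseek-chat"` goes to DeepSeek, and any other model falls through to the default Gemini upstream.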

Access Control

For access control, Octelium provides layer-7 aware, identity-based, context-aware ABAC on a per-request basis. You can control access based on the HTTP request's path, method, and even serialized JSON body content, using policy-as-code with CEL and Open Policy Agent (OPA) (read more about Policies and access control here). Here is a generic example:

```yaml
kind: Service
metadata:
  name: my-api
spec:
  mode: HTTP
  config:
    upstream:
      url: https://api.example.com
    http:
      enableRequestBuffering: true
      body:
        mode: JSON
  authorization:
    inlinePolicies:
      - spec:
          rules:
            - effect: ALLOW
              condition:
                all:
                  of:
                    - match: ctx.user.spec.groups.hasAll("dev", "ops")
                    - match: ctx.request.http.bodyMap.messages.size() < 4
                    - match: ctx.request.http.bodyMap.model in ["gpt-3.5-turbo", "gpt-4o-mini"]
                    - match: ctx.request.http.bodyMap.temperature < 0.7
```

This was just a very simple example of access control for an OpenAI-compliant LLM API. Furthermore, you can use Open Policy Agent (OPA) to create much more complex access control decisions.
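When drafting such a policy, it can help to check sample payloads against the same conditions locally. The sketch below restates the four conditions of the `my-api` example as a TypeScript predicate. It only mirrors the intent of the policy and does not evaluate CEL; it assumes `hasAll` means the User belongs to every listed group, and the `ChatRequest` type and `isAllowed` function are hypothetical names.

```typescript
// Hypothetical local mirror of the ALLOW rule; it does not evaluate CEL.
interface ChatRequest {
  model: string;
  temperature: number;
  messages: { role: string; content: string }[];
}

const requiredGroups = ["dev", "ops"];
const allowedModels = ["gpt-3.5-turbo", "gpt-4o-mini"];

// True only when all four conditions of the rule hold together.
function isAllowed(userGroups: string[], body: ChatRequest): boolean {
  return (
    requiredGroups.every((g) => userGroups.includes(g)) &&
    body.messages.length < 4 &&
    allowedModels.includes(body.model) &&
    body.temperature < 0.7
  );
}
```

For example, a User in both `dev` and `ops` sending one message to `gpt-4o-mini` with a temperature of 0.5 passes, while the same request at temperature 0.9, or from a User who is only in `dev`, does not.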

Request/Response Manipulation

You can also use Octelium's plugins, currently Lua scripts and Envoy's ExtProc, to sanitize and manipulate your requests and responses (read more about HTTP plugins here). Here is an example:

```yaml
kind: Service
metadata:
  name: safe-gemini
spec:
  mode: HTTP
  isPublic: true
  config:
    upstream:
      url: https://generativelanguage.googleapis.com
    http:
      enableRequestBuffering: true
      body:
        mode: JSON
      path:
        addPrefix: /v1beta/openai
      plugins:
        - name: main
          condition:
            match: ctx.request.http.path == "/chat/completions" && ctx.request.http.method == "POST"
          lua:
            inline: |
              function onRequest(ctx)
                local body = json.decode(octelium.req.getRequestBody())

                -- Cap the temperature
                if body.temperature > 0.7 then
                  body.temperature = 0.7
                end

                -- Downgrade the model
                if body.model == "gemini-2.5-pro" then
                  body.model = "gemini-2.5-flash"
                end

                -- Reject conversations with more than 4 messages
                if #body.messages > 4 then
                  octelium.req.exit(400)
                  return
                end

                -- Lua arrays are 1-indexed: overwrite the first message with a system prompt
                body.messages[1].role = "system"
                body.messages[1].content = "You are a helpful assistant that provides concise answers"

                -- Reject overly long messages
                for idx, message in ipairs(body.messages) do
                  if strings.lenUnicode(message.content) > 500 then
                    octelium.req.exit(400)
                    return
                  end
                end

                -- Guardrail check for higher temperatures
                if body.temperature > 0.4 then
                  local c = http.client()
                  c:setBaseURL("http://guardrail-api.default.svc")
                  local req = c:request()
                  req:setBody(json.encode(body))
                  local resp, err = req:post("/v1/check")
                  if err then
                    octelium.req.exit(500)
                    return
                  end

                  if resp:code() == 200 then
                    local apiResp = json.decode(resp:body())
                    if not apiResp.isAllowed then
                      octelium.req.exit(400)
                      return
                    end
                  end
                end

                -- Semantic caching lookup on the message after the system prompt, if present
                local second = body.messages[2]
                if second and strings.contains(strings.toLower(second.content), "paris") then
                  local c = http.client()
                  c:setBaseURL("http://semantic-caching.default.svc")
                  local req = c:request()
                  req:setBody(json.encode(body))
                  local resp, err = req:post("/v1/get")
                  if err then
                    octelium.req.exit(500)
                    return
                  end

                  if resp:code() == 200 then
                    local apiResp = json.decode(resp:body())
                    octelium.req.setResponseBody(json.encode(apiResp.response))
                    octelium.req.exit(200)
                    return
                  end
                end

                octelium.req.setRequestBody(json.encode(body))
              end
```
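The purely local rules of the Lua script (the temperature cap, the model downgrade, and the message count and length limits) can be prototyped and unit-tested outside the gateway before being embedded in a plugin. The following TypeScript sketch mirrors only those rules. It leaves out the system prompt override and the guardrail and semantic caching calls, it assumes `strings.lenUnicode` counts Unicode code points, and the names `CompletionBody`, `SanitizeResult` and `sanitize` are hypothetical.

```typescript
// Hypothetical mirror of the local sanitization rules in the Lua plugin.
interface CompletionMessage {
  role: string;
  content: string;
}

interface CompletionBody {
  model: string;
  temperature: number;
  messages: CompletionMessage[];
}

type SanitizeResult =
  | { ok: true; body: CompletionBody } // forward the rewritten body upstream
  | { ok: false; status: number }; // reject with this HTTP status

function sanitize(input: CompletionBody): SanitizeResult {
  // Work on a copy so the caller's object is left untouched.
  const body: CompletionBody = {
    ...input,
    messages: input.messages.map((m) => ({ ...m })),
  };

  // Cap the temperature at 0.7.
  if (body.temperature > 0.7) {
    body.temperature = 0.7;
  }

  // Downgrade the model.
  if (body.model === "gemini-2.5-pro") {
    body.model = "gemini-2.5-flash";
  }

  // Reject conversations with more than 4 messages.
  if (body.messages.length > 4) {
    return { ok: false, status: 400 };
  }

  // Reject any message longer than 500 code points.
  for (const message of body.messages) {
    if (Array.from(message.content).length > 500) {
      return { ok: false, status: 400 };
    }
  }

  return { ok: true, body };
}
```

Returning a tagged result instead of throwing keeps the two outcomes of the plugin, rewrite and forward or exit with a status, explicit in the type.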

Observability

Octelium also provides OpenTelemetry-ready, application-layer L7 aware visibility and access logging in real time (see an example for HTTP here) that includes capturing request/response serialized JSON body content (read more here). You can read more about visibility here.

Here are a few more features that you might be interested in:

- Routing not just by request paths, but also by header keys and values, request body content including JSON (read more here).

- Request/response header manipulation (read more here).

- Cross-Origin Resource Sharing (CORS) (read more here).

- Secretless access to upstreams and injecting bearer, basic, or custom authentication header credentials (read more here).

- Application layer-aware ABAC access control via policy-as-code using CEL and Open Policy Agent (read more here).

- OpenTelemetry-ready, application-layer L7 aware auditing and visibility (read more here).