Overview

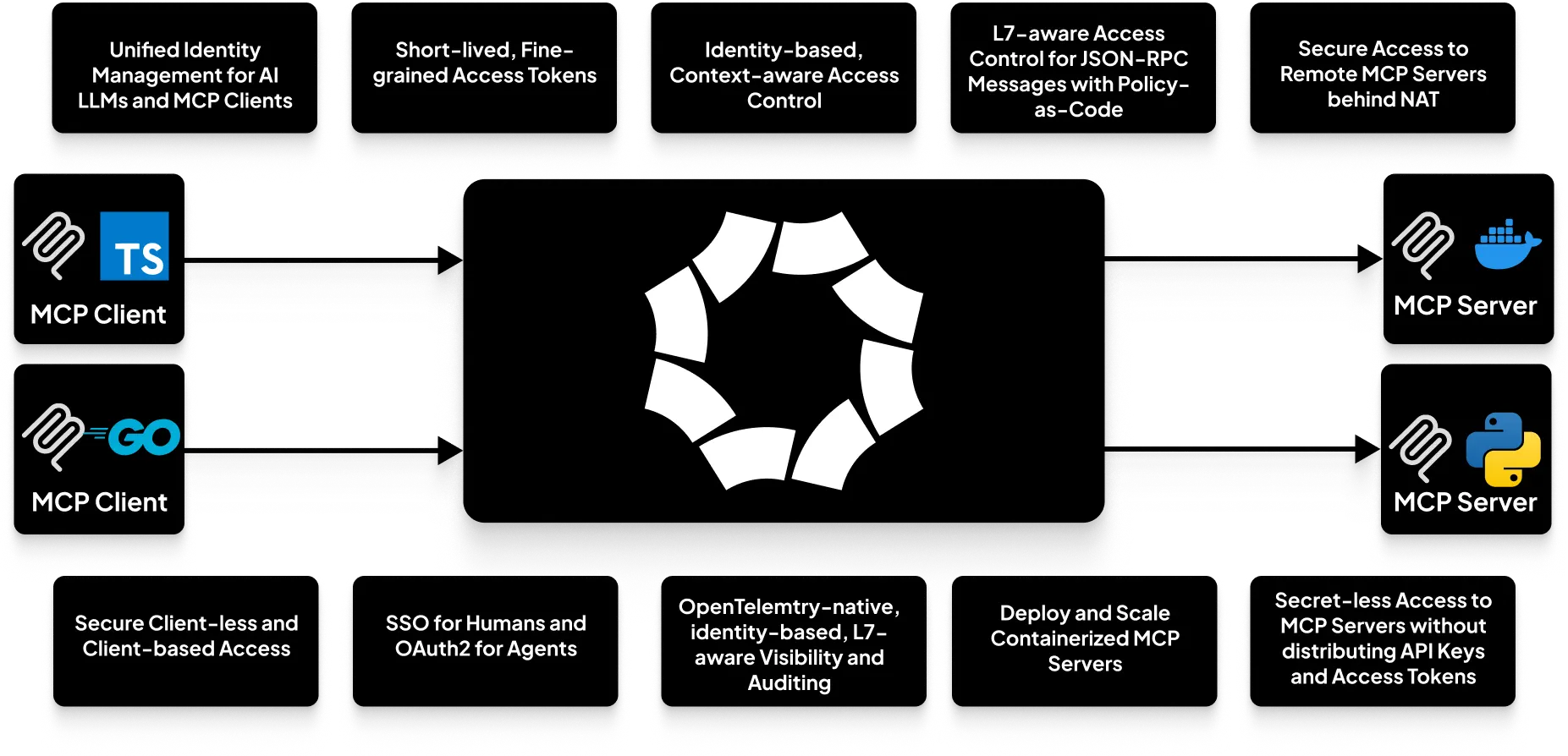

Octelium provides a unified, secure, free and open source, scalable, self-hosted infrastructure to build your MCP-based architectures, MCP gateways and modern agentic MCP and A2A meshes at scale. Octelium can operate as an MCP gateway for simple use cases as well as a scalable enterprise MCP gateway under strict security requirements with hundreds or even thousands of MCP servers and clients. Octelium provides the following:

- A unified scalable infrastructure for all your MCP clients, written in any programming language, to securely access all MCP servers running behind NAT anywhere (e.g. private clouds, IoT, your own laptop, etc...), via both client-based as well as clientless access over standard OAuth2 and bearer authentication.

- Deploy and scale your containerized SSE/streamable HTTP-based MCP servers in constrained Kubernetes pods managed by the Octelium Cluster (read more about managed containers here).

- Centralized identity-based, application-layer (L7) aware access control that is based on the content of JSON-RPC messages (read more about access control here).

- Unified, scalable identity management for all your MCP clients.

- Request/output sanitization and manipulation of MCP JSON-RPC messages via Lua scripts and Envoy ExtProc plugins (read more here).

- OpenTelemetry-native, identity-based, L7 aware visibility and auditing that captures requests and responses including serialized JSON body content.

- Seamless horizontal scalability and availability since Octelium operates on top of Kubernetes (read more about how Octelium works here).

- GitOps-friendly declarative, programmable management (read more here).

In short, Octelium not only takes care of providing secure access to your MCP servers in any environment behind NAT, but it also lets you move identity management and authentication, L7-aware authorization, MCP server deployment and scalability, input/output MCP message validation and manipulation, as well as visibility out of the codebase of your MCP clients and servers, so that you can focus solely on your business logic.

MCP Servers

In this guide we are going to use a very simple MCP server that provides just addition and subtraction operations and is served over the streamable HTTP transport. The MCP server is written in Python via FastMCP as follows:

    import asyncio
    import os

    from fastmcp import FastMCP

    mcp = FastMCP("Demo Octelium MCP Server")


    @mcp.tool()
    def add(a: int, b: int) -> int:
        return a + b


    @mcp.tool()
    def subtract(a: int, b: int) -> int:
        return a - b


    if __name__ == "__main__":
        asyncio.run(
            mcp.run_async(
                transport="streamable-http",
                host="0.0.0.0",
                # Environment variables are strings, so convert the port to an int.
                port=int(os.getenv("PORT", "8080")),
            )
        )
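For orientation, here is a minimal sketch of the JSON-RPC 2.0 message that an MCP client sends to invoke one of these tools. The helper name `build_tools_call` is hypothetical and only used for illustration; the message shape follows the `tools/call` request that appears in the AccessLog example later in this guide.

```python
import json


def build_tools_call(name: str, arguments: dict, request_id: int) -> dict:
    """Build a JSON-RPC 2.0 `tools/call` request for an MCP server.

    Hypothetical helper: it only assembles the message, it does not send it.
    """
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


if __name__ == "__main__":
    # Print the request body for calling the `add` tool defined above.
    message = build_tools_call("add", {"a": 4, "b": 7}, request_id=1)
    print(json.dumps(message, indent=2))
```

This is the body that Octelium later exposes to policies and plugins when JSON body processing is enabled for the Service.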

We now explore three ways to use our MCP server as an upstream for an Octelium Service that represents it (read more about Services here):

- The MCP server can be running in any internal network behind NAT (e.g. private cloud, your own laptop, IoT, etc...). Here is a simple example where the upstream is running at http://localhost:8080 and is remotely served by a connected octelium client used by the User mcp-01 (read more about serving Services via connected Users here):

      kind: Service
      metadata:
        name: my-mcp
      spec:
        port: 8080
        mode: HTTP
        isPublic: true
        config:
          upstream:
            url: http://localhost:8080
            user: mcp-01

The isPublic field enables the public clientless (i.e. BeyondCorp) access mode. Read more here.

- You can also deploy and scale your containerized streamable HTTP-based MCP server and serve it as a Service by reusing the underlying Kubernetes infrastructure that runs the Octelium Cluster (read more about managed containers here), including Docker images from private container registries (e.g. a Docker registry, GitHub's ghcr, etc...). Managed containers are deployed and scaled via Octelium and served directly as a Service upstream. Here is an example:

      kind: Service
      metadata:
        name: my-mcp
      spec:
        mode: HTTP
        isPublic: true
        config:
          upstream:
            container:
              port: 8080
              image: ghcr.io/org/my-mcp:1.2.3
              credentials:
                usernamePassword:
                  username: ghcr-username
                  password:
                    fromSecret: ghcr-token
              resourceLimit:
                cpu:
                  millicores: 1000
                memory:
                  megabytes: 2000

Octelium also provides dynamic configuration to route to different upstreams (e.g. multiple MCP server versions) based on identity and/or context (read more here) via policy-as-code on a per-request basis. You can even use the managed container mode to simultaneously deploy multiple containers and route among them (read more here).

- The third possibility is that your SSE/streamable HTTP MCP server is publicly reachable over the internet but protected by some L7 credential such as an API key or a bearer access token. Octelium supports secretless access for Users to public MCP servers that are protected by standard bearer access tokens, basic authentication, API keys set in custom headers, or OAuth2 client credentials flows, without having to manage and distribute such credentials to your Users. You can read more here. Here is a simple example for an MCP server that is protected by bearer authentication:

      kind: Service
      metadata:
        name: my-mcp
      spec:
        mode: HTTP
        isPublic: true
        config:
          upstream:
            url: https://my-mcp.example.com
          http:
            auth:
              bearer:
                fromSecret: my-api-key

Whether your MCP server is listening behind NAT or deployed as a managed container, you can create its Service via the octeliumctl apply command (read more here) as follows:

octeliumctl apply /PATH/TO/SERVICE.YAML

If you have many MCP servers that need to be categorized by a certain domain or a functionality, you might want to take a look at Namespaces (read more here) where you can organize your MCP server Services and affect their hostnames as well as access control to a whole set of Services that share a certain purpose or functionality according to your needs.

MCP Clients

Now we move on to the client side to see how to securely access our MCP server. Octelium provides a client-based mode, where the octelium connect command, run via the octelium CLI or container, gives private access to your Services over WireGuard/QUIC tunnels (read more here), as well as a clientless access mode (read more here) for your MCP clients to access all your MCP servers. When it comes to user and identity management, Octelium supports two User types:

- HUMAN Users, who usually access Services by authenticating to the Cluster via their web browsers through an OpenID Connect, SAML 2.0 or GitHub OAuth2 IdentityProvider (read more here).

- WORKLOAD Users, used by non-human entities such as servers, containers and applications written in any programming language. Such Users can authenticate to the Cluster through authentication tokens (read more here), OpenID Connect assertions or OAuth2 client credentials (read more here), or can be issued access tokens directly (read more here).

Clientless Access

The main value of using Octelium is unified and scalable identity management for all your clients: a single standard OAuth2 client credential or bearer access token lets an MCP client access all the MCP servers it is authorized for, without using any special SDKs or clients on the client side, or even being aware of the Octelium Cluster's existence at all.

MCP clients can use the standard OAuth2 client credentials flow to obtain a bearer access token and publicly access the MCP server Service. You can read in detail on how to issue an OAuth client credential to access a Service here.

You can also issue access token Credentials directly (read more here) and use them in standard bearer authentication.
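The clientless flow described above can be sketched with the Python standard library alone. This is a minimal illustration under stated assumptions, not a definitive client: `TOKEN_URL` and `MCP_URL` are hypothetical placeholders that you must replace with your Cluster's actual token endpoint and your Service's public URL, and sending the client ID and secret in the form body is one of the client authentication options allowed by OAuth2, assumed here for simplicity.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical placeholders: replace with your own Cluster and Service values.
TOKEN_URL = "https://example.com/oauth2/token"  # assumed token endpoint
MCP_URL = "https://my-mcp.example.com/mcp"      # assumed public Service URL


def build_token_request(client_id: str, client_secret: str) -> urllib.request.Request:
    """Prepare an OAuth2 client credentials token request (not sent yet)."""
    form = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
    }).encode()
    return urllib.request.Request(
        TOKEN_URL,
        data=form,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def build_mcp_request(access_token: str, payload: dict) -> urllib.request.Request:
    """Prepare a JSON-RPC POST to the MCP Service using bearer authentication."""
    return urllib.request.Request(
        MCP_URL,
        data=json.dumps(payload).encode(),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
            # Streamable HTTP clients accept both plain JSON and SSE responses.
            "Accept": "application/json, text/event-stream",
        },
        method="POST",
    )


def fetch_access_token(client_id: str, client_secret: str) -> str:
    """Send the token request and return the `access_token` field of the reply."""
    with urllib.request.urlopen(build_token_request(client_id, client_secret)) as resp:
        return json.load(resp)["access_token"]
```

A client would call `fetch_access_token(...)` once, then pass the returned token to `build_mcp_request(...)` and send the result with `urllib.request.urlopen`. A directly issued access token Credential can be passed to `build_mcp_request` in the same way, skipping the token request entirely.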

Access Control

Now we move on to access control. Octelium's application-layer (L7) awareness enables you to control access at the HTTP layer based on HTTP request paths, headers, methods and, more importantly for MCP, the JSON body of the requests. Here is an example:

    kind: Service
    metadata:
      name: my-mcp
    spec:
      port: 8080
      mode: HTTP
      isPublic: true
      config:
        upstream:
          url: https://mcp.example.com
        http:
          enableRequestBuffering: true
          body:
            mode: JSON
            maxRequestSize: 100000
      authorization:
        inlinePolicies:
          - spec:
              rules:
                - effect: ALLOW
                  condition:
                    all:
                      of:
                        - match: ctx.request.http.bodyMap.jsonrpc == "2.0"
                        - match: ctx.request.http.bodyMap.method == "tools/call"
                        - match: ctx.request.http.bodyMap.params.name in ["add", "subtract"]
                        - match: ctx.request.http.bodyMap.params.arguments.a < 1000
                        - match: ctx.request.http.bodyMap.params.arguments.b > 1000
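To reason about which requests the rule above matches, its five conditions can be mirrored as a plain Python predicate. This is only an illustrative sketch for testing expectations locally; it is not how Octelium evaluates policies, and the function name `matches_allow_rule` is hypothetical.

```python
def matches_allow_rule(body: dict) -> bool:
    """Return True when a JSON-RPC body satisfies every condition of the rule."""
    params = body.get("params") or {}
    args = params.get("arguments") or {}
    a, b = args.get("a"), args.get("b")
    return (
        body.get("jsonrpc") == "2.0"
        and body.get("method") == "tools/call"
        and params.get("name") in ("add", "subtract")
        # Missing or non-numeric arguments never match.
        and isinstance(a, (int, float)) and a < 1000
        and isinstance(b, (int, float)) and b > 1000
    )


if __name__ == "__main__":
    request = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "add", "arguments": {"a": 4, "b": 2000}},
    }
    print(matches_allow_rule(request))
```

Because all conditions must hold, a call such as `add` with `a = 4` and `b = 7` does not match this ALLOW rule: the last condition requires `b` to be greater than 1000.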

You are not restricted to attaching a Policy or an InlinePolicy to the Service. You can define your own Policies and InlinePolicies and attach them to specific Users representing specific MCP clients or hosts. You can also attach your Policies to certain Groups of Users (read more here) or Namespaces (read more here).

Visibility

Octelium provides OpenTelemetry-native, application-layer L7 aware visibility and access logging in real time (see an example for HTTP here) that includes capturing request/response serialized JSON body content (read more here). You can read more about visibility here. Here is an example of a JSON AccessLog (see a full example here) of a request highlighting the request and response details:

    {
      "apiVersion": "core/v1",
      "kind": "AccessLog",
      "metadata": {
        // Omitted for brevity
      },
      "entry": {
        // Omitted for brevity
      },
      "info": {
        "http": {
          "request": {
            "path": "/mcp",
            "userAgent": "node",
            "method": "POST",
            "bodyBytes": 124,
            "uri": "/mcp",
            "bodyMap": {
              "jsonrpc": "2.0",
              "method": "tools/call",
              "params": {
                "name": "add",
                "_meta": {
                  "progressToken": 3
                },
                "arguments": {
                  "a": 4,
                  "b": 7
                }
              },
              "id": 3
            }
          },
          "response": {
            "code": 200,
            "bodyBytes": 151,
            "contentType": "text/event-stream"
          },
          "httpVersion": "HTTP11"
        }
      }
    }
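Once AccessLogs are exported to your own tooling, the MCP details can be pulled out of the captured body. The following is a minimal sketch that assumes each log has already been parsed into a Python dict with the field names shown in the example above; the function name `summarize_tool_call` is hypothetical. Since the example omits parts of the log, the sketch looks for `info` both at the top level and under `entry`.

```python
from typing import Optional


def summarize_tool_call(access_log: dict) -> Optional[dict]:
    """Extract the tool name, arguments and response code from an AccessLog.

    Returns None when the log does not describe an MCP `tools/call` request.
    """
    # Assumption: `info` may sit at the top level or nested under `entry`.
    info = access_log.get("info") or access_log.get("entry", {}).get("info") or {}
    http = info.get("http") or {}
    body = (http.get("request") or {}).get("bodyMap") or {}
    if body.get("method") != "tools/call":
        return None
    params = body.get("params") or {}
    return {
        "tool": params.get("name"),
        "arguments": params.get("arguments") or {},
        "status": (http.get("response") or {}).get("code"),
    }


if __name__ == "__main__":
    sample = {
        "kind": "AccessLog",
        "info": {
            "http": {
                "request": {
                    "bodyMap": {
                        "jsonrpc": "2.0",
                        "method": "tools/call",
                        "params": {"name": "add", "arguments": {"a": 4, "b": 7}},
                        "id": 3,
                    }
                },
                "response": {"code": 200},
            }
        },
    }
    print(summarize_tool_call(sample))
```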

Request and Response Manipulation

You can also use Octelium's plugins, currently mainly Lua scripts and Envoy's ExtProc, to sanitize your MCP request parameters, validate them against your own JSON schema, and modify responses (read more about HTTP plugins here). Here is an example using Lua:

    kind: Service
    metadata:
      name: my-mcp
    spec:
      port: 8080
      mode: HTTP
      isPublic: true
      config:
        upstream:
          url: https://mcp.example.com
        http:
          plugins:
            - name: check-inputs
              condition:
                match: ctx.request.http.path == "/mcp"
              lua:
                inline: |
                  function onRequest(ctx)
                    local body = json.decode(octelium.req.getRequestBody())
                    body.params.arguments.userUID = ctx.user.metadata.uid
                    body.params.arguments.userEmail = ctx.user.spec.email
                    if body.params.name == "another-add" then
                      body.params.name = "add"
                    end

                    if body.params.arguments.a < 0 then
                      body.params.arguments.a = 0
                    end

                    if body.params.arguments.b > 1000 then
                      body.params.arguments.b = 1000
                    end

                    if body.params.name == "sub" then
                      local c = http.client()
                      c:setBaseURL("http://check-mcp.default.svc")
                      local req = c:request()

                      local schemaResp, err = req:post("/v1/get-json-schema")
                      if err then
                        octelium.req.exit(500)
                        return
                      end

                      if schemaResp:code() == 200 then
                        if not json.isSchemaValid(schemaResp:body(), json.encode(body.params)) then
                          octelium.req.exit(400)
                          return
                        end
                      end

                      req:setBody(json.encode(body.params))
                      local resp, err = req:post("/v1/check-subtract-params")
                      if err then
                        octelium.req.exit(500)
                        return
                      end

                      if resp:code() == 200 then
                        local apiResp = json.decode(resp:body())
                        if not apiResp.userIsAllowed then
                          octelium.req.exit(403)
                          return
                        end
                      end
                    end

                    octelium.req.setRequestBody(json.encode(body))
                  end
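The local, non-network part of the Lua script (renaming the tool and clamping the arguments) can be mirrored in Python to check the transformations you expect before deploying the plugin. This is a sketch for local testing only: the function name `sanitize` is hypothetical, and the user metadata injection and the HTTP calls to the external check service are deliberately left out.

```python
import copy


def sanitize(body: dict) -> dict:
    """Mirror the rename and clamping steps of the Lua plugin.

    Returns a new dict and leaves the input untouched.
    """
    out = copy.deepcopy(body)
    params = out["params"]
    args = params["arguments"]

    # Map the alias "another-add" onto the real tool name.
    if params["name"] == "another-add":
        params["name"] = "add"

    # Clamp `a` to be at least 0 and `b` to be at most 1000.
    args["a"] = max(args["a"], 0)
    args["b"] = min(args["b"], 1000)
    return out


if __name__ == "__main__":
    request = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "another-add", "arguments": {"a": -5, "b": 5000}},
    }
    print(sanitize(request)["params"])
```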

Octelium also provides other plugins (read more about HTTP plugins here) such as rate limiting, caching, JSON schema validation and direct response that can be used to control your MCP client requests. Here is another example:

    kind: Service
    metadata:
      name: my-mcp
    spec:
      port: 8080
      mode: HTTP
      isPublic: true
      config:
        upstream:
          url: https://mcp.example.com
        http:
          plugins:
            - name: main-rate-limit
              condition:
                matchAny: true
              ratelimit:
                limit: 100
                window:
                  minutes: 2
            - name: validate-call
              condition:
                match: ctx.request.http.bodyMap.params.name == "sub"
              jsonSchema:
                inline: <YOUR_JSON_SCHEMA>

Here are a few more features that you might be interested in:

- Request/response header manipulation (read more here).

- Application layer-aware ABAC access control via policy-as-code using CEL and Open Policy Agent (read more here).

- Exposing the API publicly for anonymous access (read more here).

- OpenTelemetry-ready, application-layer L7 aware auditing and visibility (read more here).