Introduction

Octelium is a unified zero trust architecture (ZTA) that is built to be generic enough to operate as a zero-config remote access VPN, a Zero Trust Network Access (ZTNA)/BeyondCorp platform, an ngrok alternative, an API gateway, an AI/LLM gateway, an infrastructure for MCP gateways and A2A architectures/meshes, a PaaS-like platform, a Kubernetes gateway/ingress alternative and even as homelab infrastructure.

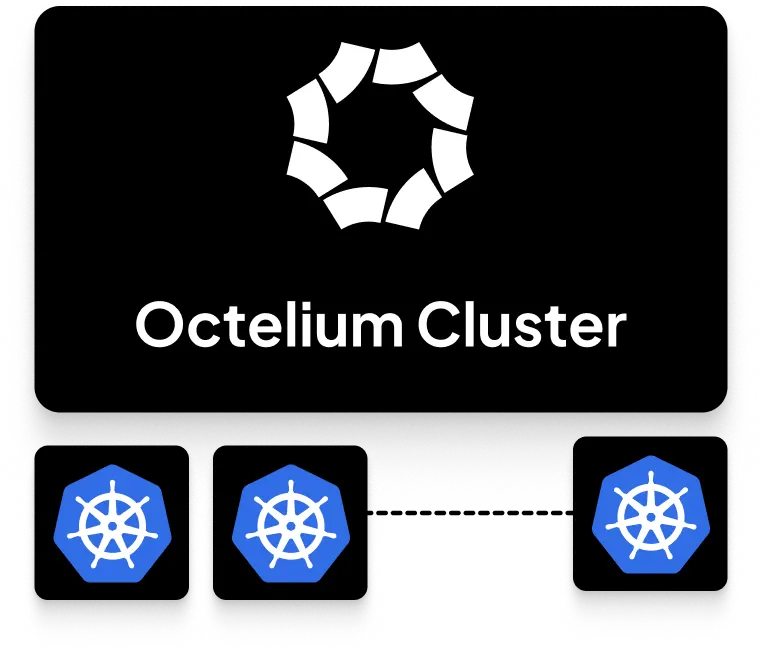

A single Octelium system is called a Cluster and is defined and addressed by its domain (e.g. example.com, octelium.example.com, etc...). The Cluster provides secure secretless access, for Users, both humans and workloads used by non-human entities, to any private/internal resource behind NAT in any environment as well as to publicly protected resources such as SaaS APIs and databases, via identity-based, context-aware, application-layer (L7)-aware access control on a per-request basis.

An Octelium Cluster runs on top of Kubernetes. The Cluster can run on a single-node Kubernetes cluster that is installed on top of a single cheap EC2 or DigitalOcean VM instance (see the quick installation guide here). In a production-grade environment, however, the Cluster should typically run on top of a scalable on-prem or managed Kubernetes cluster. It's noteworthy that even though Octelium operates on top of Kubernetes, you don't need experience with Kubernetes to manage, operate or use Octelium.

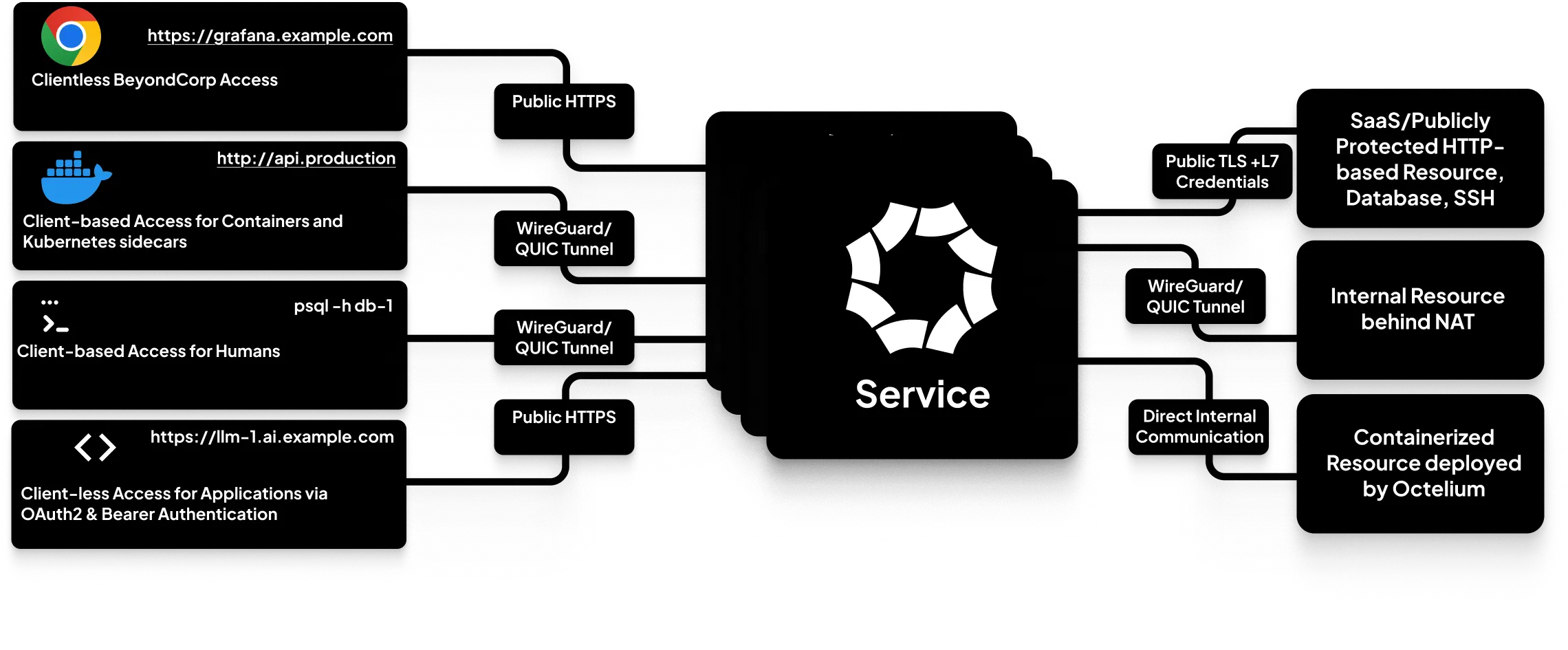

Each resource that is protected by the Cluster is represented by a Service. A Service is implemented by an identity-aware proxy (IAP) abstracting all dynamic network-layer details of the protected resource (i.e. upstream) behind it. Users access protected resources through Services via two zero-trust network access (ZTNA) modes:

- Client-based where Users use a lightweight client (namely the octelium CLI tool) to connect to the Cluster through WireGuard tunnels (as well as experimentally QUIC-based tunnels) and access Services. This mode simply acts as a zero-config VPN from the User's perspective where Users can address Services via stable private FQDNs and hostnames assigned by the Cluster. You can read more in detail about connecting to Clusters here.

- Clientless (also called BeyondCorp) where Users can access Services through an internet-facing reverse proxy without the need for any client to be installed on their side. Not only does this mode enable human Users to access web-based Services via their browsers, but it also enables workload Users to directly access any HTTP-based Service such as APIs, Kubernetes clusters, gRPC services, etc... via standard OAuth2 client credentials flow (read more here) as well as directly via bearer access tokens (read more here). In other words, your applications written in any programming language can securely access such Services without having to use any additional client or special SDK. You can read more about the clientless BeyondCorp mode here. Moreover, Octelium can also provide public anonymous access (read more here) that can be useful for hosting use cases, for example.
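As a rough illustration of the clientless mode for workloads, the sketch below builds an HTTP request that carries a bearer access token. It assumes the token is presented in the standard Authorization header using the Bearer scheme. The Service URL and token value are hypothetical placeholders, and the request is only constructed, never sent.

```python
import urllib.request


def build_service_request(service_url: str, access_token: str) -> urllib.request.Request:
    """Build (but do not send) a GET request that carries a bearer access token."""
    req = urllib.request.Request(service_url, method="GET")
    # Assumption: the token travels in the standard "Authorization: Bearer ..." header.
    req.add_header("Authorization", f"Bearer {access_token}")
    return req


# Hypothetical Service FQDN and token, for illustration only.
req = build_service_request("https://api.example.com/v1/items", "ACCESS_TOKEN_PLACEHOLDER")
```

Because only a standard HTTP header is involved, the same request could be produced by any HTTP client in any language, which is the point the paragraph above makes about not needing a special SDK.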

The Cluster is designed to be managed in a centralized, declarative as well as programmatic way that is very similar to the way Kubernetes itself is managed. The Cluster administrators can use one command (namely octeliumctl apply) to (re)produce the entire state of the Cluster, enabling the Cluster's administrators to define the Cluster resources in YAML files and store them in Git repositories where the entire state can be updated/rolled back effortlessly in a similar way to other GitOps friendly systems such as Kubernetes (read more here).
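The declarative idea can be sketched generically as comparing a desired state with the live state and applying only the difference. This is a toy model, not how octeliumctl apply is implemented; the function names, resource names and specs are all hypothetical, and deletion of resources is deliberately left out because it is not covered here.

```python
def plan_apply(current: dict, desired: dict) -> dict:
    """Compare the live state with the desired state and list what must change."""
    plan = {"create": [], "update": []}
    for name, spec in sorted(desired.items()):
        if name not in current:
            plan["create"].append(name)
        elif current[name] != spec:
            plan["update"].append(name)
    return plan


def apply_state(current: dict, desired: dict) -> dict:
    """Return the new state after laying the desired resources over the current ones."""
    new_state = dict(current)
    new_state.update(desired)
    return new_state


# Hypothetical resources keyed by name; the specs are illustrative only.
live = {"svc-a": {"port": 8080}}
wanted = {"svc-a": {"port": 9090}, "svc-b": {"port": 5432}}

first_plan = plan_apply(live, wanted)
live = apply_state(live, wanted)
second_plan = plan_apply(live, wanted)  # re-applying the same definitions changes nothing
```

The second plan is empty, which is what makes a declarative workflow safe to repeat, and rolling back amounts to applying an earlier version of the same definitions.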

The Cluster consists of various components which can be classified into a data-plane and a control-plane. In a typical production multi-node Kubernetes environment, the Cluster should have at least one Kubernetes node dedicated to the control-plane. The Cluster uses one or more Kubernetes nodes for its data-plane. Each data-plane Kubernetes node is used as a Gateway by the Cluster, acting as a host for the Services running on it.

Data Plane Overview

Human Users can authenticate themselves using OpenID Connect or SAML IdP IdentityProviders (read more about IdentityProviders here) and workload Users can authenticate themselves via authentication tokens (read more here) or federated OpenID Connect-based assertions (read more here). Once authenticated, a Session is created for that User and is tied to a short-lived (4 hours by default but it is configurable via the ClusterConfig) access token credential representing the Session, which enables access to protected resources through Services if the request is authorized (read more here).
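To make the short-lived credential concrete, here is a minimal sketch of an expiry check using the 4-hour default mentioned above. The function and constant names are hypothetical, and real token validation involves more than a timestamp comparison.

```python
from datetime import datetime, timedelta, timezone

# Default lifetime taken from the text; the constant name is hypothetical.
DEFAULT_ACCESS_TOKEN_TTL = timedelta(hours=4)


def token_is_valid(issued_at: datetime, now: datetime,
                   ttl: timedelta = DEFAULT_ACCESS_TOKEN_TTL) -> bool:
    """Return True while `now` falls inside the token's lifetime."""
    return issued_at <= now < issued_at + ttl


issued = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
still_valid = token_is_valid(issued, issued + timedelta(hours=3, minutes=59))
expired = token_is_valid(issued, issued + timedelta(hours=4))
```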

Now let's walk through how a request flows from a User to a protected resource through its Service.

From the User to the Service

When a User starts accessing a Service, the data-plane flow takes different but equivalent paths to reach the Service depending on the mode (i.e. whether privately using the client-based mode via the octelium CLI tool or publicly via the clientless mode) as follows:

- In the client-based mode (i.e. via the octelium connect command as illustrated here), a User is connected to the Cluster through WireGuard/QUIC tunnels to all of its Gateways. Whenever the User accesses a Service, the request traffic is carried from the User's side over the internet through the tunnel to the Gateway hosting the Service depending on the Service's private address, and once it reaches the Cluster's side of the tunnel (i.e. the Gateway), the inner traffic is de-encapsulated and forwarded to the corresponding Service according to its private IP address.

- In the clientless BeyondCorp mode, the request addresses the Service by its public FQDN, like any public resource with a public DNS entry. The request reaches the Cluster through its frontend reverse-proxy component, called Ingress, which forwards it to the corresponding Service based on its FQDN.
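The two paths above can be pictured as two different lookups that end at the same Service. The sketch below is a toy model only: the lookup tables, addresses, Service names and function names are hypothetical and do not reflect how Ingress or the Gateways are actually implemented.

```python
import ipaddress
from typing import Optional

# Hypothetical routing tables; real addressing is assigned by the Cluster.
SERVICES_BY_FQDN = {"api.example.com": "api", "wiki.example.com": "wiki"}
SERVICES_BY_PRIVATE_IP = {
    ipaddress.ip_address("10.0.0.10"): "api",
    ipaddress.ip_address("10.0.0.11"): "wiki",
}


def route_clientless(host_header: str) -> Optional[str]:
    """Clientless path: pick the Service from the public FQDN in the Host header."""
    host = host_header.split(":", 1)[0].strip().lower().rstrip(".")
    return SERVICES_BY_FQDN.get(host)


def route_client_based(dest_ip: str) -> Optional[str]:
    """Client-based path: pick the Service from the inner packet's private destination IP."""
    return SERVICES_BY_PRIVATE_IP.get(ipaddress.ip_address(dest_ip))


via_ingress = route_clientless("API.example.com:443")
via_tunnel = route_client_based("10.0.0.10")
```

Both lookups resolve to the same Service, which is what "different but equivalent paths" means in practice. The Host parsing here does not handle IPv6 literals.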

At the Service

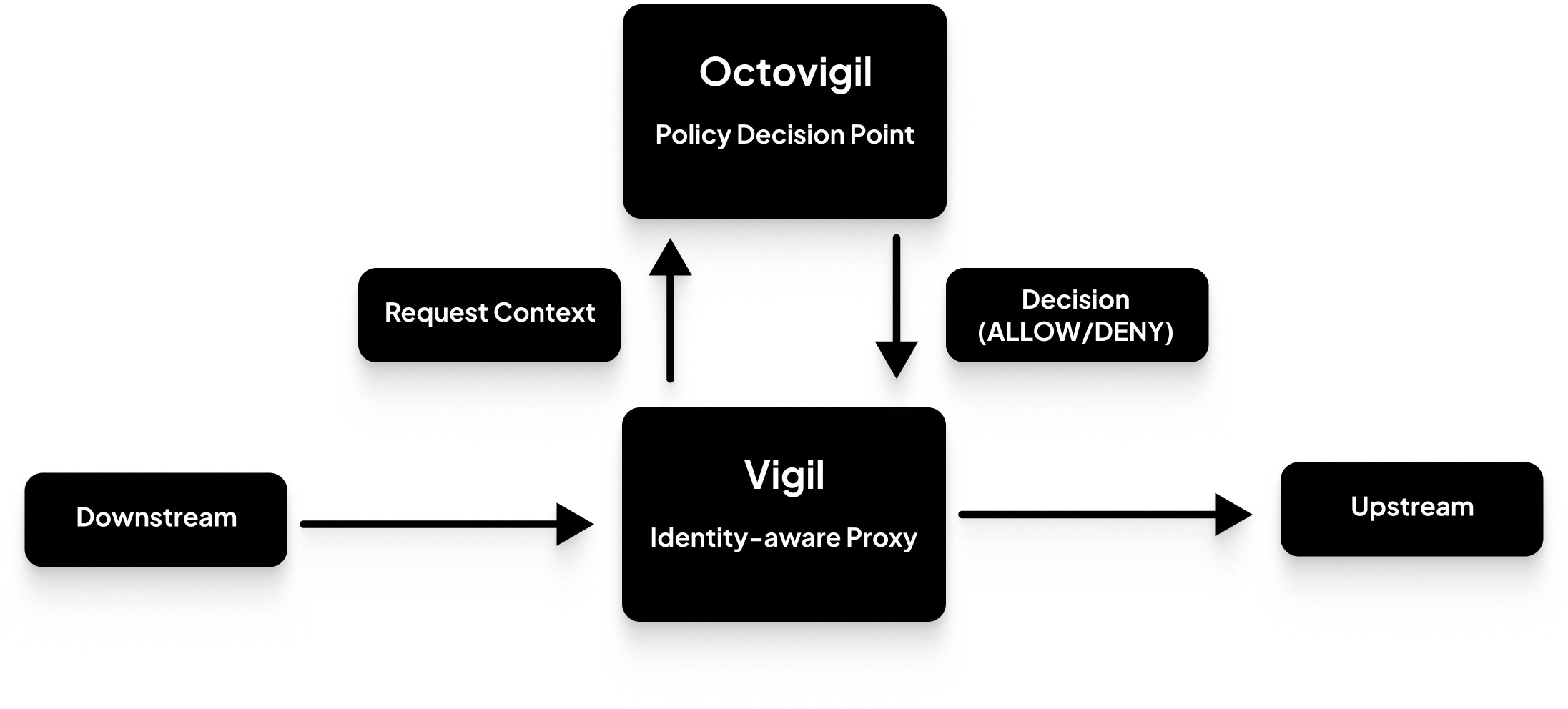

A Service is implemented by an identity-aware proxy (IAP) via a component called Vigil that runs as a Kubernetes pod inside a Gateway (i.e. a data-plane Kubernetes node). Each Service belongs to a Namespace which acts as the parent of its Services according to a common functionality (e.g. project names, environments such as production or staging, etc...) as well as being the parent domain name for such Services (read more here). You can read in detail about Namespaces here.

For every new request coming from the User, Vigil builds up the request context, which is simply the information required to authenticate and identify the User's Session as well as other information that is tied to the request, such as application-layer specific information (e.g. HTTP request headers, path, method, etc...). Vigil then forwards this information to another component called Octovigil. Octovigil is a policy decision point (PDP); it authenticates and then authorizes the request by evaluating all Policies required to control the access of that specific request. Once Octovigil computes the decision as to whether the request is allowed or denied, it sends the decision back to Vigil. If the request is allowed, Vigil proceeds with the request to the actual protected upstream resource. You can read in detail about access control and Policy management here.
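The enforcement/decision split described above can be modeled in a few lines. This is a simplified sketch under stated assumptions: every class, function and field name is hypothetical, policies are plain Python predicates rather than real Octelium Policies, and the rule "allow if any policy matches, otherwise deny" is an illustrative choice, not the documented evaluation semantics.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass(frozen=True)
class RequestContext:
    """Information the enforcement point collects for each request."""
    session_token: str
    method: str
    path: str
    headers: Dict[str, str] = field(default_factory=dict)


Policy = Callable[[str, RequestContext], bool]


class DecisionPoint:
    """Toy decision point: authenticate the Session, then evaluate policies."""

    def __init__(self, sessions: Dict[str, str], policies: List[Policy]):
        self.sessions = sessions  # maps access token -> user name
        self.policies = policies

    def decide(self, ctx: RequestContext) -> bool:
        user = self.sessions.get(ctx.session_token)
        if user is None:
            return False  # unauthenticated requests are denied
        return any(policy(user, ctx) for policy in self.policies)


def enforce(ctx: RequestContext, pdp: DecisionPoint,
            upstream: Callable[[RequestContext], str]) -> str:
    """Toy enforcement point: forward to the upstream only if the decision is allow."""
    if not pdp.decide(ctx):
        return "403 Forbidden"
    return upstream(ctx)


def read_only_reports_for_alice(user: str, ctx: RequestContext) -> bool:
    return user == "alice" and ctx.method == "GET" and ctx.path.startswith("/reports")


pdp = DecisionPoint({"token-1": "alice"}, [read_only_reports_for_alice])
upstream = lambda ctx: f"200 OK {ctx.path}"

allowed = enforce(RequestContext("token-1", "GET", "/reports/q1"), pdp, upstream)
denied_method = enforce(RequestContext("token-1", "DELETE", "/reports/q1"), pdp, upstream)
denied_unknown = enforce(RequestContext("bogus", "GET", "/reports/q1"), pdp, upstream)
```

The decision is made per request from the request context, so the same Session can be allowed for one method or path and denied for another.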

Unlike in VPNs which operate and enforce access control at layer-3 using segmentation, Octelium's application-layer awareness enables it to understand various layer-7 protocols such as HTTP-based Services including web apps, APIs, gRPC services and Kubernetes clusters, as well as SSH, DNS and PostgreSQL-based and MySQL-based databases. Application-layer awareness simply unlocks new capabilities across 4 key areas:

- Access control: Vigil is capable of extracting layer-7 information that can be taken into account in your Policies. For example, in HTTP-based Services, this can include access control by request headers, method, path, serialized JSON body content, etc... (read more here). For Kubernetes, this can include access control by the resource, namespace, verb, etc... (read more here). For PostgreSQL and MySQL, this can include access control by users, databases and queries (read more here and here). Octelium provides you, via Octovigil, the policy-decision-point (PDP), a modern, centralized, scalable, fine-grained, dynamic, context-aware, layer-7 aware, attribute-based access control system (ABAC) on a per-request basis using modular and composable Policies that enable you to write your policy-as-code using CEL as well as OPA (Open Policy Agent). You can read in detail about Policy management here. It's also noteworthy that Octelium intentionally has no notion whatsoever of an "admin" or "superuser" User. In other words, zero standing privileges are the default state and all permissions including those to the API Server can be restricted via Policies and are time/context-bound on a per-request basis.

- Secretless access to upstreams: Vigil is capable of injecting application-layer specific credentials required by the upstream protected resource on-the-fly, thus eliminating the need to share and manage such typically long-lived and over-privileged credentials as well as having to distribute them to Users who, in turn, must carry the burden of managing and storing them securely. For example, in HTTP-based Services this can be API keys and access tokens obtained from OAuth2 client credentials flows (read more here), in Kubernetes this can be kubeconfig files (read more here), in SSH it can be passwords or private keys (read more here), in PostgreSQL-based and MySQL-based databases this can be passwords (read more here and here). Vigil can also inject mTLS keys used by any generic upstream that requires mTLS. This mechanism also allows you to grant access on a per-request basis to Users only via your Policies regardless of the permissions granted to the injected application-layer specific credentials used to access the upstream. You can read in detail about secretless access here.

- Dynamic configuration and routing: Octelium's application-layer awareness enables you to dynamically route to different upstreams (e.g. an API with multiple versions where each version is served by a different upstream), set different upstream L7 credentials corresponding to different upstream accounts and/or permissions, as well as set other layer-7 specific configurations depending on the mode (read more here). Furthermore, Octelium enables advanced L7-aware request/response manipulation for HTTP-based Services via extensible Lua and Envoy ExtProc plugins (read more here).

- Visibility and auditing: Vigil is built to be OpenTelemetry-ready and emits access logs in real time, which not only clearly identify the subject (i.e. the User, their Session and Device if available) and the resource represented by the Service, but can also provide you with application-layer specific details of the request (e.g. HTTP request details such as paths and methods, PostgreSQL and MySQL database queries, etc...). You can read in detail about visibility and access logs here.
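As one concrete picture of secretless access for an HTTP-based Service, the sketch below swaps the caller's own credential for the upstream's credential before forwarding. It is illustrative only: the function name, header handling and placeholder values are hypothetical, and it assumes the upstream expects a bearer token in the Authorization header.

```python
from typing import Dict


def inject_upstream_credential(user_headers: Dict[str, str],
                               upstream_token: str) -> Dict[str, str]:
    """Return the headers to forward upstream, carrying the upstream's own credential.

    The caller's Authorization header (if any) is dropped, so the User never
    needs to know or hold the upstream credential.
    """
    forwarded = {k: v for k, v in user_headers.items() if k.lower() != "authorization"}
    # Assumption: the upstream accepts a bearer token in the Authorization header.
    forwarded["Authorization"] = f"Bearer {upstream_token}"
    return forwarded


# Hypothetical incoming headers and upstream credential placeholder.
incoming = {"authorization": "Bearer user-session-token", "Accept": "application/json"}
outgoing = inject_upstream_credential(incoming, "UPSTREAM_API_KEY_PLACEHOLDER")
```

The incoming headers are left untouched and only the forwarded copy carries the upstream credential, which mirrors the idea that what the User may do is governed by Policies rather than by possession of the upstream secret.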

It's worth pointing out the deliberate decision to separate Vigil, the policy enforcement point (PEP), from Octovigil, the policy decision point (PDP). This architecture enables both components to scale horizontally and independently of one another. Since the PDP needs to locally store a possibly immense amount of information about the subjects (i.e. Sessions, Users, Groups and Devices) and resources (i.e. Services and Namespaces), and also needs to evaluate complex logic set in Policies, it could be very impractical in terms of resource usage to embed all such information inside every Vigil instance at scale.

From the Service to the Upstream

Once the request is authorized by the Service, it proceeds to the protected resource whose address can be either:

- A static IP/FQDN private/public address that is directly reachable from within the Cluster (e.g. a Kubernetes service/pod running on the same cluster, a private resource running in the same private network (e.g. same AWS VPC) as the Cluster, a public SaaS API protected by an access token, etc...).

- A static IP/FQDN private/public address that is remotely reachable through a connected User assigned to serve the Service. This opens the door to serving Services from anywhere outside the direct reach of the Cluster (e.g. private resources hosted by your laptop behind a NAT, Docker containers from anywhere, Kubernetes pods from other clusters, resources in multiple private clouds, etc...). In such a case, the request proceeds from the Service to the address of the connected client whose Session is serving the resource over the corresponding WireGuard/QUIC tunnel. You can read more here.

- The Octelium Cluster is also capable of acting as a PaaS-like deployment platform by reusing the underlying Kubernetes infrastructure to automatically deploy and scale Dockerized images/containerized applications and serve them as Service upstreams. You can read in detail about managed containers here.

It is noteworthy that upstreams need to know absolutely nothing about the existence of the Cluster. From the upstream point of view, it's just another request coming from within its own network. That means that the entire resource-intensive process of access control as well as visibility is completely done within the Cluster.

A Platform on top of Kubernetes

So far, we have explained the data-plane flow from the User to the Service's upstream. However, to automate, manage and scale this process for a system with an arbitrary number of Services and Users we need to have a control-plane. This is somewhat similar to what Kubernetes does by building a control plane around containers but in our case the identity-aware proxy, Vigil, is the elementary unit in the platform instead of containers in the case of Kubernetes.

The Octelium Cluster uses Kubernetes as an infrastructure for itself to seamlessly operate as a scalable and reliable distributed system without any manual intervention from the Cluster administrators. For example, the Cluster administrators don't need to bother about how to deploy the identity-aware proxy components whenever they need to add a new Service. In other words, creating a Service using the Octelium Cluster APIs or via the octeliumctl CLI will automatically deploy all the underlying Kubernetes resources (e.g. pods that run Vigil containers) implementing that Service. This automatic orchestration provided by Kubernetes enables the Cluster administrators to forget about the operational side where the Cluster components can be managed, run and scaled up/down solely via the abstract Octelium Cluster APIs.

Moreover, using Kubernetes as infrastructure seamlessly enables Octelium Clusters to operate at any scale. For example, horizontally scaling Services translates to scaling Kubernetes Vigil pods/containers; adding a new Gateway to increase the overall traffic and throughput is automatically done by just adding a new Kubernetes node. Finally, using Kubernetes as an infrastructure platform for Octelium enables it to reuse the same infrastructure to effortlessly deploy your containerized applications and serve them through typical Octelium Services without any manual Kubernetes management, effectively providing PaaS-like capabilities (read more here).